In this note we consider an invaluable programming tool, the parser generator. The problem that we want to solve is: how do we parse strings, that is, convert (unstructured) strings, the lowest-level representation of a program text, into (highly structured) representations like expressions, statements, functions, etc., which can then be compiled or interpreted?

Of course, the problem is much more general and arises in pretty much every large-scale system: how do you convert raw data strings into structured objects that can be manipulated by the rest of the system?

Of course, one can imagine various convoluted algorithms for extracting structure from strings. Indeed, you may well think that the conversion routine depends heavily on the target of the conversion! However, it turns out that we can design a small domain-specific language that describes a large number of the kinds of target structures, and we will use a parser generator that will automatically convert the structure description into a parsing function!

An Arithmetic Interpreter

As a running example, let us build a small interpreter for a language of arithmetic expressions, described by the type

type aexpr =

| Const of int

| Var of string

| Plus of aexpr * aexpr

| Minus of aexpr * aexpr

| Times of aexpr * aexpr

| Divide of aexpr * aexpr

shown in the file [arithInterpreter.ml][0]. This expression language is quite similar to what you saw for the random-art assignment, and we can write a simple recursive evaluator for it

let foo x = match x with

| C1 ... -> e1

| C2 ... -> e2

let foo = function

| C1 ... -> e1

| C2 ... -> e2

let rec eval env e = match e with

| Const i -> i

| Var s -> List.assoc s env

| Plus (e1, e2) -> eval env e1 + eval env e2

| Minus (e1, e2) -> eval env e1 - eval env e2

| Times (e1, e2) -> eval env e1 * eval env e2

| Divide (e1, e2) -> eval env e1 / eval env e2

Here the env is a (string * int) list corresponding to a list of variables and their corresponding values. Thus, if you run the above, you would see something like

# eval [] (Plus (Const 2, Const 6)) ;;

- : int = 8

# eval [("x",16); ("y", 10)] (Minus (Var "x", Var "y")) ;;

- : int = 6

# eval [("x",16); ("y", 10)] (Minus (Var "x", Var "z")) ;;

Exception: Not_found.

Now it is rather tedious to write ML expressions like Plus (Const 2, Const 6) and Minus (Var "x", Var "z"). We would like to obtain a simple parsing function

val parseAexpr : string -> aexpr

that converts a string to the corresponding aexpr if possible. For example, it would be sweet if we could get

# parseAexpr "2 + 6" ;;

- : aexpr = Plus (Const 2, Const 6)

# parseAexpr "(x - y) / 2" ;;

- : aexpr = Divide (Minus (Var "x", Var "y"), Const 2)

and so on. Let's see how to get there.
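For reference, the type, the evaluator and the sample calls above can be collected into one self-contained sketch. The helper eval_opt is not part of the original file; it is a hypothetical addition that returns None instead of raising Not_found when a variable is missing from env.

```ocaml
(* Arithmetic expressions, as in the type definition above. *)
type aexpr =
  | Const of int
  | Var of string
  | Plus of aexpr * aexpr
  | Minus of aexpr * aexpr
  | Times of aexpr * aexpr
  | Divide of aexpr * aexpr

(* env is a (string * int) list mapping variable names to values. *)
let rec eval env e = match e with
  | Const i -> i
  | Var s -> List.assoc s env  (* raises Not_found if s is unbound *)
  | Plus (e1, e2) -> eval env e1 + eval env e2
  | Minus (e1, e2) -> eval env e1 - eval env e2
  | Times (e1, e2) -> eval env e1 * eval env e2
  | Divide (e1, e2) -> eval env e1 / eval env e2

(* Hypothetical helper (not in the original): unbound variables give None. *)
let eval_opt env e =
  try Some (eval env e) with Not_found -> None

let () =
  let env = [("x", 16); ("y", 10)] in
  Printf.printf "%d\n" (eval [] (Plus (Const 2, Const 6)));      (* prints 8 *)
  Printf.printf "%d\n" (eval env (Minus (Var "x", Var "y")));    (* prints 6 *)
  match eval_opt env (Minus (Var "x", Var "z")) with
  | Some v -> Printf.printf "%d\n" v
  | None -> print_endline "unbound variable"                     (* this branch runs *)
```

Returning an option is only one possible design; the evaluator in the note simply lets the exception propagate.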

Strategy

We will use a two-step strategy to convert raw strings into structured data.

Step 1 (Lexing) : From String to Tokens

Strings are really just a list of very low-level characters. In the first step, we will aggregate the characters into more meaningful tokens that contain more high-level information. For example, we can aggregate a sequence of numeric characters into an integer, and a sequence of alphanumerics (starting with a lower-case letter) into, say, a variable name.

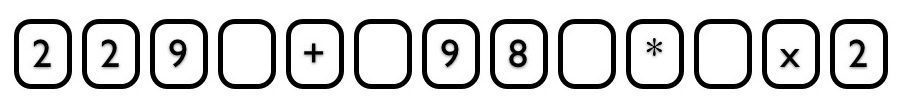

Thus, as a result of the lexing phase, we can convert a list of individual characters

Characters

into a list of tokens

Tokens
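To make the lexing step concrete, here is a minimal hand-written sketch of the characters-to-tokens conversion. It is for illustration only: the note itself generates the lexer with a tool rather than writing it by hand. The token type mirrors the token names used later in this note, while tokenize, span and the is_* helpers are hypothetical names, and the sample input "229 + 98 * x2" is an assumption inferred from the parse result shown in Step 2.

```ocaml
(* Token type mirroring the token names used later in this note. *)
type token =
  | CONST of int
  | VAR of string
  | PLUS | MINUS | TIMES | DIVIDE
  | LPAREN | RPAREN

let is_digit c = '0' <= c && c <= '9'
let is_lower c = 'a' <= c && c <= 'z'
let is_alnum c = is_digit c || is_lower c || ('A' <= c && c <= 'Z')

(* Index just past the longest run of characters satisfying p, from i. *)
let rec span p s i =
  if i < String.length s && p s.[i] then span p s (i + 1) else i

(* Convert a string into a list of tokens, skipping whitespace. *)
let tokenize (s : string) : token list =
  let n = String.length s in
  let rec go i acc =
    if i >= n then List.rev acc
    else
      match s.[i] with
      | ' ' | '\t' | '\r' | '\n' -> go (i + 1) acc
      | '+' -> go (i + 1) (PLUS :: acc)
      | '-' -> go (i + 1) (MINUS :: acc)
      | '*' -> go (i + 1) (TIMES :: acc)
      | '/' -> go (i + 1) (DIVIDE :: acc)
      | '(' -> go (i + 1) (LPAREN :: acc)
      | ')' -> go (i + 1) (RPAREN :: acc)
      | c when is_digit c ->
          (* Aggregate a maximal run of digits into one integer token. *)
          let j = span is_digit s i in
          go j (CONST (int_of_string (String.sub s i (j - i))) :: acc)
      | c when is_lower c ->
          (* A lower-case letter followed by alphanumerics is a variable. *)
          let j = span is_alnum s i in
          go j (VAR (String.sub s i (j - i)) :: acc)
      | c -> failwith (Printf.sprintf "unexpected character %c" c)
  in
  go 0 []
```

Under these assumptions, tokenize "229 + 98 * x2" yields [CONST 229; PLUS; CONST 98; TIMES; VAR "x2"].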

Step 2 (Parsing) : From Tokens to Tree

Next, we will use a special description of the structures we are trying to generate called a grammar to convert the list of tokens into a tree-like representation of our final structure:

- : aexpr = Plus (Const 229, Times (Const 98, Var "x2"))

The actual algorithms for converting from lists of tokens to trees are very subtle and sophisticated. We will omit a detailed description and instead just look at how the structures can themselves be represented by grammars.

Next, we get into the details of the above strategy by describing exactly what the lexer and parser (generators) do in terms of their input and output.

Lexers

We will use the tool called ocamllex to automatically obtain a lexer from a high-level description of what the tokens are and what sequences of characters should get mapped to tokens.

Tokens

The file [arithParser0.mly][1] describes the set of tokens needed to represent our simple language

%token <int> CONST

%token <string> VAR

%token PLUS MINUS TIMES DIVIDE

%token LPAREN RPAREN

%token EOF

Note that the first two tokens, CONST and VAR, also carry values with them, of type int and string respectively.

Regular Expressions

Next, we must describe the sequences of characters that get aggregated into a particular token. This is done using [regular expressions][7] defined in the file [arithLexer.mll][2].

{ open ArithParser }

rule token = parse

| eof { EOF }

| [' ' '\t' '\r' '\n'] { token lexbuf }

| ['0'-'9']+ as l { CONST (int_of_string l) }

| ['a'-'z']['A'-'z' '0'-'9']* as l { VAR l }

| '+' { PLUS }

| '-' { MINUS }

| '*' { TIMES }

| '/' { DIVIDE }

| '(' { LPAREN }

| ')' { RPAREN }

The first line at the top simply imports the token definitions from arithParser.mly. Next, there is a sequence of rules of the form | <regexp> { ml-expr }.

Intuitively, each regular expression describes a sequence of characters, and when that sequence is matched in the input string, the corresponding ML expression is evaluated to obtain the token that is to be returned on the match. Let’s see some examples,

| eof { EOF }

| '+' { PLUS }

| '-' { MINUS }

| '*' { TIMES }

| '/' { DIVIDE }

| '(' { LPAREN }

| ')' { RPAREN }

When eof is reached (i.e. we hit the end of the string), a token called EOF is generated. Similarly, when a character such as +, - or / is encountered, the lexer generates the token PLUS, MINUS or DIVIDE, respectively.

| [' ' '\t' '\r' '\n'] { token lexbuf }

[c1 c2 ... cn], where each ci is a character, denotes a regular expression that matches any one of the characters in the sequence. Thus, the regexp [' ' '\t' '\r' '\n'] indicates that if a blank, tab, carriage return or newline is hit, the lexer should simply ignore it and recursively generate the token corresponding to the rest of the buffer.

| ['0'-'9']+ as l { CONST (int_of_string l) }

['0'-'9'] denotes a regexp that matches any digit character. When you take a regexp e and put a + after it, the result e+ corresponds to one-or-more repetitions of e. Thus, the regexp ['0'-'9']+ matches a non-empty sequence of digit characters! Here, the variable l holds the exact substring that was matched, and we simply write CONST (int_of_string l) to return the CONST token carrying the int value corresponding to the matched substring.

| ['a'-'z']['A'-'z' '0'-'9']* as l { VAR l }

e1 e2 denotes a regexp that matches any string s that can be split into two parts s1 and s2 (s.t. s == s1 ^ s2) where s1 matches e1 and s2 matches e2. That is, e1 e2 is a sequencing regexp that first matches e1 and then matches e2. e* corresponds to zero-or-more repetitions of e. Thus, ['a'-'z']['A'-'z' '0'-'9']* is a regexp that matches all strings that begin with a lower-case letter and then have a (possibly empty) sequence of alphanumeric characters. (Strictly speaking, the range 'A'-'z' also covers the six ASCII punctuation characters that sit between 'Z' and 'a', such as '_' and '^'.) As before, the entire matching string is bound to the variable l, and in this case the VAR l token is returned, indicating that an identifier appeared in the input stream.
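The three regexp constructions described above (character sets, sequencing e1 e2, and the repetitions e* and e+) can be mimicked with a few small OCaml functions. This is only a sketch to build intuition, not how ocamllex works internally: the matchers are greedy and never backtrack, which happens to be enough for these particular patterns. All names (matcher, char_where, seq, star, plus, number, ident) are hypothetical, and alnum uses strict alphanumerics rather than the 'A'-'z' range.

```ocaml
(* A matcher tries to match a prefix of s starting at index i and returns
   the index just past the match, or None if there is no match. *)
type matcher = string -> int -> int option

(* Character sets and ranges: match one character satisfying pred. *)
let char_where (pred : char -> bool) : matcher =
  fun s i -> if i < String.length s && pred s.[i] then Some (i + 1) else None

(* e1 e2: match e1, then match e2 on what follows. *)
let seq (m1 : matcher) (m2 : matcher) : matcher =
  fun s i ->
    match m1 s i with
    | None -> None
    | Some j -> m2 s j

(* e*: zero or more repetitions, taking as many as possible. *)
let rec star (m : matcher) : matcher =
  fun s i ->
    match m s i with
    | Some j when j > i -> star m s j
    | _ -> Some i

(* e+: one or more repetitions, i.e. e followed by e*. *)
let plus (m : matcher) : matcher = seq m (star m)

let digit = char_where (fun c -> '0' <= c && c <= '9')
let lower = char_where (fun c -> 'a' <= c && c <= 'z')
let alnum =
  char_where (fun c ->
    ('a' <= c && c <= 'z') || ('A' <= c && c <= 'Z') || ('0' <= c && c <= '9'))

let number = plus digit            (* like ['0'-'9']+ *)
let ident = seq lower (star alnum) (* like a lower-case letter, then alphanumerics *)
```

For instance, number "92z" 0 evaluates to Some 2 (the match stops before z), while ident "92z" 0 evaluates to None because the string does not start with a lower-case letter.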

Running the Lexer

We can run the lexer directly to look at the sequences of tokens found. The function Lexing.from_string simply converts an input string into a buffer on which the actual lexer operates.

# ArithLexer.token (Lexing.from_string "+");;

- : ArithParser.token = ArithParser.PLUS

# ArithLexer.token (Lexing.from_string "294");;

- : ArithParser.token = ArithParser.CONST 294

Next, we can write a function that recursively keeps grinding away to get all the possible tokens from a string (until it hits eof). When we call that function it behaves thus:

# token_list_of_string "23 + + 92 zz /" ;;

- : ArithParser.token list =

[ArithParser.CONST 23; ArithParser.PLUS; ArithParser.PLUS;

ArithParser.CONST 92; ArithParser.VAR "zz"; ArithParser.DIVIDE]

# token_list_of_string "23++92zz/" ;;

- : ArithParser.token list =

[ArithParser.CONST 23; ArithParser.PLUS; ArithParser.PLUS;

ArithParser.CONST 92; ArithParser.VAR "zz"; ArithParser.DIVIDE]

Note that the above two calls produce exactly the same result, because the lexer finds maximal matches.
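The definition of token_list_of_string is not shown in this note. One way such a driver might be written is sketched below. The helper tokens_until is a hypothetical name, and it takes the lexer function and the end-of-file token as parameters so that the block runs on its own, without the generated ArithLexer and ArithParser modules.

```ocaml
(* Repeatedly call next on buf, collecting tokens until eof is returned.
   The eof token itself is not included in the result. *)
let tokens_until ~next ~eof buf =
  let rec go acc =
    let t = next buf in
    if t = eof then List.rev acc else go (t :: acc)
  in
  go []

(* With the generated modules, one would presumably write something like:

     let token_list_of_string s =
       tokens_until ~next:ArithLexer.token ~eof:ArithParser.EOF
         (Lexing.from_string s)

   This is an assumption about how the pieces fit together, not the
   definition used in the original files. *)
```

The loop relies on the lexer being stateful: each call to next consumes a little more of the buffer, which is how lexers generated by ocamllex behave on a Lexing.lexbuf.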

# token_list_of_string "92z" ;;

- : ArithParser.token list = [ArithParser.CONST 92; ArithParser.VAR "z"]

Here, when it hits the z it knows that the number pattern has ended and a new variable pattern has begun. Of course, if you give it something that doesn’t match anything, you get an exception

# parse_string "%" ;;

Exception: Failure "lexing: empty token".

Parsers

Next, we will use the tool called ocamlyacc to automatically obtain a parser from a high-level description of the target structure called a grammar. (Note: grammars are a very deep area of study; we’re going to take a very superficial look here, guided by the pragmatics of how to convert strings to aexpr values.)

Grammars

A grammar is a recursive definition of a set of trees, comprising

Non-terminals and Terminals, which describe the internal and leaf nodes of the tree, respectively. Here, the leaf nodes will be tokens.

Rules of the form

nonterm :

| term-or-nonterm-1 ... term-or-nonterm-n { OCaml-Expr }

that describe the possible configurations of children of each internal node, together with an OCaml expression that generates a value that is used to decorate the node. This value is computed from the values decorating the respective children.

We can define the following simple grammar for arith expressions:

aexpr:

| aexpr PLUS aexpr { Plus ($1, $3) }

| aexpr MINUS aexpr { Minus ($1, $3) }

| aexpr TIMES aexpr { Times ($1, $3) }

| aexpr DIVIDE aexpr { Divide ($1, $3) }

| CONST { Const $1 }

| VAR { Var $1 }

| LPAREN aexpr RPAREN { $2 }

Note that the above grammar (almost) directly mimics the recursive type definition of the expressions. In the above grammar, the only non-terminal is aexpr (we could call it whatever we like; we just picked the same name for convenience). The terminals are the tokens we defined earlier, and each rule corresponds to how you would take the sub-trees (i.e. sub-expressions) and stitch them together to get bigger trees.

The line %type <ArithInterpreter.aexpr> aexpr at the top stipulates that each aexpr node will be decorated with a value of type ArithInterpreter.aexpr – that is, by a structured arithmetic expression.

Next, let us consider each of the rules in turn.

| CONST { Const $1 }

| VAR { Var $1 }

The base-case rules for CONST and VAR state that those (individual) tokens can be viewed as corresponding to aexpr nodes. Consider the target expression in the curly braces. Here $1 denotes the value decorating the 1st (and only!) element of the corresponding sequence of terminals and non-terminals. That is, in the former (respectively latter) case, $1 is the int (respectively string) value associated with the token, which we use to obtain the base arithmetic expressions via the appropriate constructors.

| aexpr PLUS aexpr { Plus ($1, $3) }

| aexpr MINUS aexpr { Minus ($1, $3) }

| aexpr TIMES aexpr { Times ($1, $3) }

| aexpr DIVIDE aexpr { Divide ($1, $3) }

The inductive-case rules, e.g. the one for PLUS, say that if there is a token sequence that is parsed into an aexpr node, followed by a PLUS token, followed by a sequence that is parsed into an aexpr node, then the entire sequence can be parsed into an aexpr node. Here $1 and $3 refer to the first and third elements of the sequence, that is, the left and right subexpressions. The decorated value is simply the super-expression obtained by applying the Plus constructor to the left and right subexpressions. The same applies to the MINUS, TIMES and DIVIDE rules.

| LPAREN aexpr RPAREN { $2 }

The last rule allows us to parse parenthesized expressions: if there is a left-paren token followed by an expression followed by a matching right-paren token, then the whole sequence is an aexpr node. Notice how the decorated expression is simply $2, which decorates the second element of the sequence, i.e. the (sub) expression being wrapped in parentheses.

Running the Parser

Great, let's take our parser out for a spin! First, let's build the different elements

$ cp arithParser0.mly arithParser.mly

$ make clean

rm -f *.cm[io] arithLexer.ml arithParser.ml arithParser.mli

$ make

ocamllex arithLexer.mll

11 states, 332 transitions, table size 1394 bytes

ocamlyacc arithParser.mly

16 shift/reduce conflicts.

ocamlc -c arithInterpreter.ml

ocamlc -c arithParser.mli

ocamlc -c arithLexer.ml

ocamlc -c arithParser.ml

ocamlc -c arith.ml

ocamlmktop arithLexer.cmo arithParser.cmo arithInterpreter.cmo arith.cmo -o arith.top

Now we have a specialized top-level with the relevant libraries baked in. So we can do:

$ rlwrap ./arith.top

Objective Caml version 3.11.2

# open Arith;;

# eval_string [] "1 + 3 + 6" ;;

- : int = 10

# eval_string [("x", 100); ("y", 20)] "x - y" ;;

- : int = 80

And lo! we have a simple calculator that also supports variables.
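The function eval_string lives in arith.ml, which is not shown in this note. For each start symbol, ocamlyacc generates an entry function that takes a lexer function and a lexing buffer and returns the decorated value of the root node, so the glue code is plausibly a one-liner along the following lines. The name eval_string_with and its labelled parameters are hypothetical; they are passed in explicitly here so that the sketch compiles without the generated modules.

```ocaml
(* Hypothetical glue code, not the original arith.ml.
   entry : the generated parser entry point (presumably ArithParser.aexpr,
           assuming the start symbol is named aexpr)
   lexer : the generated lexer function (ArithLexer.token)
   eval  : the evaluator from arithInterpreter.ml *)
let eval_string_with ~entry ~lexer ~eval env s =
  eval env (entry lexer (Lexing.from_string s))
```

With the real modules in scope, eval_string would then be eval_string_with applied to those three functions.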

Precedence and Associativity

OK, it looks like our calculator works fine, but let's try this

# eval_string [] "2 * 5 + 5" ;;

- : int = 20

Huh?! You would think that the above should yield 15, as * has higher precedence than +, and so the above expression is really (2 * 5) + 5. Indeed, if we took the trouble to put the parentheses in, the right thing happens

# eval_string [] "(2 * 5) + 5" ;;

- : int = 15

Indeed, the same issue arises with a single operator

# eval_string [] "2 - 1 - 1" ;;

- : int = 2

What happens here is that the grammar we gave is ambiguous, as there are multiple ways of parsing the string 2 * 5 + 5, namely

Plus (Times (Const 2, Const 5), Const 5), or

Times (Const 2, Plus (Const 5, Const 5))

We want the former, but ocamlyacc gives us the latter! Similarly, there are multiple ways of parsing 2 - 1 - 1, namely

Minus (Minus (Const 2, Const 1), Const 1), or

Minus (Const 2, Minus (Const 1, Const 1))

Again, since - is left-associative, we want the former, but we get the latter! (Incidentally, this is why we got those weird grumbles about shift/reduce conflicts when we ran ocamlyacc above, but let's not go too deep into that…)
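To see that the choice of parse tree really changes the answer, we can evaluate the two candidate trees for each string directly. The sketch below restricts the expression type to the constructors that these examples need and drops the environment; it is a cut-down illustration, not the evaluator from arithInterpreter.ml.

```ocaml
(* A cut-down expression type: just what the two examples use. *)
type aexpr =
  | Const of int
  | Plus of aexpr * aexpr
  | Minus of aexpr * aexpr
  | Times of aexpr * aexpr

let rec eval e = match e with
  | Const i -> i
  | Plus (e1, e2) -> eval e1 + eval e2
  | Minus (e1, e2) -> eval e1 - eval e2
  | Times (e1, e2) -> eval e1 * eval e2

(* The two parse trees for "2 * 5 + 5". *)
let times_first = Plus (Times (Const 2, Const 5), Const 5)
let plus_first = Times (Const 2, Plus (Const 5, Const 5))

(* The two parse trees for "2 - 1 - 1". *)
let left_assoc = Minus (Minus (Const 2, Const 1), Const 1)
let right_assoc = Minus (Const 2, Minus (Const 1, Const 1))

let () =
  Printf.printf "%d %d\n" (eval times_first) (eval plus_first);  (* prints 15 20 *)
  Printf.printf "%d %d\n" (eval left_assoc) (eval right_assoc)   (* prints 0 2 *)
```

The second number on each line is what the ambiguous grammar produced in the sessions above.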

There are various ways of adding precedence. One is to hack the grammar by adding various extra non-terminals, as done in [arithParser2.mly][5]. Note how there are no conflicts if you use that grammar instead.

However, since this is such a common problem, there is a much simpler solution, which is to add precedence and associativity annotations to the .mly file. In particular, let us use the modified grammar [arithParser1.mly][3].
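The idea of extra non-terminals can be illustrated with a small hand-written recursive-descent parser in which each precedence level gets its own function: expr for + and -, term for * and /, and factor for constants, variables and parenthesized expressions. This is a sketch of the layering idea only. It is not the contents of arithParser2.mly, it is not the algorithm ocamlyacc uses, and the names expr, term, factor and parse are hypothetical. The loops in expr_tail and term_tail build left-nested trees, which gives left associativity.

```ocaml
type aexpr =
  | Const of int
  | Var of string
  | Plus of aexpr * aexpr
  | Minus of aexpr * aexpr
  | Times of aexpr * aexpr
  | Divide of aexpr * aexpr

type token =
  | CONST of int
  | VAR of string
  | PLUS | MINUS | TIMES | DIVIDE
  | LPAREN | RPAREN

(* Each function consumes a prefix of the token list and returns the
   tree it built together with the remaining tokens. *)
let rec expr toks =
  let (lhs, rest) = term toks in
  expr_tail lhs rest

(* Lowest level: + and -, folded to the left. *)
and expr_tail lhs toks = match toks with
  | PLUS :: rest ->
      let (rhs, rest') = term rest in
      expr_tail (Plus (lhs, rhs)) rest'
  | MINUS :: rest ->
      let (rhs, rest') = term rest in
      expr_tail (Minus (lhs, rhs)) rest'
  | _ -> (lhs, toks)

and term toks =
  let (lhs, rest) = factor toks in
  term_tail lhs rest

(* Middle level: * and /, folded to the left. *)
and term_tail lhs toks = match toks with
  | TIMES :: rest ->
      let (rhs, rest') = factor rest in
      term_tail (Times (lhs, rhs)) rest'
  | DIVIDE :: rest ->
      let (rhs, rest') = factor rest in
      term_tail (Divide (lhs, rhs)) rest'
  | _ -> (lhs, toks)

(* Highest level: atoms and parenthesized expressions. *)
and factor toks = match toks with
  | CONST n :: rest -> (Const n, rest)
  | VAR x :: rest -> (Var x, rest)
  | LPAREN :: rest ->
      (match expr rest with
       | (e, RPAREN :: rest') -> (e, rest')
       | _ -> failwith "expected )")
  | _ -> failwith "expected a constant, a variable or ("

let parse toks = match expr toks with
  | (e, []) -> e
  | _ -> failwith "unexpected trailing tokens"
```

Because a + can only appear at the expr level and a * only at the term level, the tokens for 2 * 5 + 5 can be grouped in just one way, so there is nothing left for the parser to guess.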

$ cp arithParser1.mly arithParser.mly

$ make

ocamllex arithLexer.mll

11 states, 332 transitions, table size 1394 bytes

ocamlyacc arithParser.mly

ocamlc -c arithInterpreter.ml

ocamlc -c arithParser.mli

ocamlc -c arithLexer.ml

ocamlc -c arithParser.ml

ocamlc -c arith.ml

ocamlmktop arithLexer.cmo arithParser.cmo arithInterpreter.cmo arith.cmo -o arith.top

Check it out, no conflicts this time! The only difference between this grammar and the previous one is the lines

%left PLUS MINUS

%left TIMES DIVIDE

This means that all the operators are left-associative, so e1 - e2 - e3 is parsed as if it were (e1 - e2) - e3. As a result we get

# eval_string [] "2 - 1 - 1" ;;

- : int = 0

Furthermore, we get that addition and subtraction have lower precedence than multiplication and division (the order of the annotations matters!)

# eval_string [] "2 * 5 + 5" ;;

- : int = 15

# eval_string [] "2 + 5 * 5" ;;

- : int = 27

Hence, the multiplication operator has higher precedence than the addition, as we have grown to expect, and all is well in the world.

This concludes our brief tutorial, which should suffice for your NanoML programming assignment. However, if you are curious, I encourage you to look at [this][6] for more details.
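One way to build intuition for what the two %left lines express is a precedence-climbing parser driven by a small table of binding levels. To be clear about the swap: ocamlyacc does not work this way (it uses the declarations to resolve the shift/reduce conflicts in its parsing tables), and the names level, mk, parse_expr, climb and parse_atom are hypothetical. The sketch only shows how "later line binds tighter" and "left-associative" translate into a concrete grouping rule.

```ocaml
type aexpr =
  | Const of int
  | Var of string
  | Plus of aexpr * aexpr
  | Minus of aexpr * aexpr
  | Times of aexpr * aexpr
  | Divide of aexpr * aexpr

type token =
  | CONST of int
  | VAR of string
  | PLUS | MINUS | TIMES | DIVIDE
  | LPAREN | RPAREN

(* Binding level of each binary operator, mirroring the order of the
   %left lines: operators declared later bind tighter. *)
let level = function
  | PLUS | MINUS -> Some 1
  | TIMES | DIVIDE -> Some 2
  | _ -> None

let mk op l r = match op with
  | PLUS -> Plus (l, r)
  | MINUS -> Minus (l, r)
  | TIMES -> Times (l, r)
  | DIVIDE -> Divide (l, r)
  | _ -> invalid_arg "mk: not a binary operator"

(* parse_expr lo toks parses an expression whose top-level operators
   all have binding level at least lo. *)
let rec parse_expr lo toks =
  let (lhs, rest) = parse_atom toks in
  climb lo lhs rest

and climb lo lhs toks = match toks with
  | op :: rest ->
      (match level op with
       | Some p when p >= lo ->
           (* Left-associative: the right operand may only contain
              operators that bind strictly tighter than op. *)
           let (rhs, rest') = parse_expr (p + 1) rest in
           climb lo (mk op lhs rhs) rest'
       | _ -> (lhs, toks))
  | [] -> (lhs, toks)

and parse_atom toks = match toks with
  | CONST n :: rest -> (Const n, rest)
  | VAR x :: rest -> (Var x, rest)
  | LPAREN :: rest ->
      (match parse_expr 1 rest with
       | (e, RPAREN :: rest') -> (e, rest')
       | _ -> failwith "expected )")
  | _ -> failwith "expected a constant, a variable or ("

let parse toks = match parse_expr 1 toks with
  | (e, []) -> e
  | _ -> failwith "unexpected trailing tokens"
```

Swapping the two levels in the table would make + bind tighter than *, which is the analogue of swapping the two %left lines. Changing p + 1 to p in climb would make every operator right-associative instead.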